My Research Journey

Project Research time: 2025

Project Title: Brain Computer Interfaces as Clinical Tools for Neurodegenerative Diseases

Research Institute: Neurotech Intern (ThinkNeuro)

Research poster link: Visit the completed research poster

BCIs give patients with neurodegenerative diseases a voice when speech and mobility are lost. Progress in medicine isn’t just about curing the next disease; it’s about improving patients’ quality of life along the way, especially in under-researched areas, so that no patient is left behind.

My research team:

Evaluated the role of brain-computer interface (BCI) technologies in enhancing rehabilitation, diagnostic processes, and communication for neurodegenerative disease patients.

Assessed existing applications and their clinical effectiveness across various therapeutic areas.

Identified gaps and trends in the existing literature to determine under-researched areas and provide recommendations.

Abstract

Project Research time: 2024-2025

Project Title: Synthetic Politics: Prevalence, Spreaders, and Emotional Reception of AI-Generated Political Images on X

Research Institute: USC Information Sciences Institute Research Assistant (HUMANS Lab)

Published the research paper with ACM and received the Best Student Paper Honorable Mention at the ACM Conference on Hypertext 2025: Visit the published research on ACM

Submitted research paper link: Visit the published research

Paper accepted at the ACM Hypertext 2025 conference: “Synthetic Politics: Prevalence, Spreaders, and Emotional Reception of AI-Generated Political Images on X”

Artificial intelligence isn’t just shaping the future of technology; it is also shaping how people perceive the world. We often don’t notice how political images affect our emotions, and AI-generated images can easily exploit our trust in democracy, blurring the line between truth and manipulation.

As a visual tool of persuasion, AI images both express and distort emotions, so it is important to understand how to use AI ethically for civic engagement, especially following the 2024 presidential election.

I compared the emotional sentiment surrounding AI image tweets with that surrounding non-AI image tweets. This issue matters because AI-generated political images can contribute to the spread and adoption of misinformation, the erosion of public trust, and the incitement of violence.

I started by detecting AI images and identifying superspreaders (highly influential political users) using GPT-4o and OpenCV. For each image superspreader, I sampled 300 tweets and scraped the comments on each tweet, collecting 10,000 political comments in total. Lastly, I ran TweetNLP emotion analysis on each comment, scoring 11 emotions: anger, anticipation, disgust, fear, joy, love, optimism, pessimism, sadness, surprise, and trust.
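The aggregation step that follows the emotion analysis can be sketched as below. This is a minimal illustration rather than the project's actual code: it assumes each comment has already been scored by an emotion classifier and is represented as a dictionary mapping emotion names to scores. The function name `mean_emotions` and all sample scores are hypothetical.

```python
from statistics import mean

# The 11 emotion labels named in the analysis.
EMOTIONS = [
    "anger", "anticipation", "disgust", "fear", "joy", "love",
    "optimism", "pessimism", "sadness", "surprise", "trust",
]


def mean_emotions(comment_scores):
    """Average each emotion's score over a list of per-comment score dicts.

    Emotions missing from a comment's dict are treated as 0.0.
    """
    if not comment_scores:
        raise ValueError("need at least one scored comment")
    return {
        emotion: mean(scores.get(emotion, 0.0) for scores in comment_scores)
        for emotion in EMOTIONS
    }


# Hypothetical classifier output (made-up numbers, not study data).
ai_comments = [
    {"joy": 0.8, "optimism": 0.6, "anger": 0.1},
    {"joy": 0.4, "optimism": 0.2, "anger": 0.3},
]
non_ai_comments = [
    {"joy": 0.2, "anger": 0.7, "disgust": 0.5},
    {"joy": 0.1, "anger": 0.5, "disgust": 0.3},
]

ai_means = mean_emotions(ai_comments)
non_ai_means = mean_emotions(non_ai_comments)
for emotion in ("joy", "anger"):
    print(emotion, round(ai_means[emotion], 2), round(non_ai_means[emotion], 2))
```

Keeping the per-comment scores alongside the group averages is useful, because a rank-based significance test needs the individual values rather than the means.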

After comparing the average level of each emotion for AI versus non-AI images using the Mann-Whitney U test, I found that comments under AI image tweets showed more positive emotions (higher levels of joy, optimism, love, and trust), while comments under non-AI image tweets showed more negative emotions (higher levels of anger, anticipation, disgust, fear, pessimism, sadness, and surprise). All of these differences were statistically significant (p < 0.05), with the exception of surprise.
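For readers unfamiliar with the Mann-Whitney U test, the sketch below shows how it compares two groups of scores by rank. It is an illustrative pure-Python version that assumes a two-sided test with the normal approximation, a tie correction, and no continuity correction. It is not the code used in the study, and the sample scores are made up. In practice a library routine such as SciPy's `scipy.stats.mannwhitneyu` would be the usual choice, and its p-values can differ slightly for small samples.

```python
import math
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U statistic for x, approximate two-sided p-value)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    # Tie correction: sum of t^3 - t over groups of tied values.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:
        return u1, 1.0  # all values identical, so no evidence of a difference
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return u1, p_value


# Hypothetical "joy" scores for comments under each kind of tweet.
ai_joy = [0.9, 0.8, 0.7, 0.85, 0.75]
non_ai_joy = [0.2, 0.3, 0.1, 0.25, 0.15]
u_stat, p = mann_whitney_u(ai_joy, non_ai_joy)
print(f"U = {u_stat}, p = {p:.4f}, significant at 0.05: {p < 0.05}")
```

Because the test works on ranks rather than raw values, it does not assume the emotion scores are normally distributed.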

Abstract

Project Research time: 2023

Project Title: How Accurate Are Cognitive Tests in Predicting Alzheimer’s Disease Diagnosis?

Research Location: University of California Irvine

Abstract

Alzheimer’s disease (AD), a leading type of dementia, is a prevalent neurodegenerative condition among the older population. Though AD is incurable, treatment options are available, so recognizing its presence early is beneficial. However, a diagnosis via brain scan, despite its effectiveness, can be costly and time-consuming. In our research, we focused on using cognitive tests, a quick and inexpensive option that can be administered by primary care physicians, to accurately diagnose AD. The National Alzheimer’s Coordinating Center (NACC) dataset contained the scores of 2,700 test subjects on six different cognitive tests and their diagnosis of either healthy (0), mild cognitive impairment (MCI) (1), or dementia due to AD (2). We constructed three box plots for each test, separated by diagnosis level, and altered our dataset so that diagnosis was a binary variable with value 0 for healthy subjects and value 1 for subjects with MCI or dementia due to AD. To measure the accuracy of our models through cross-validation, we split our modified dataset into training (80%) and testing (20%) sets and created logistic regression models for diagnosis with various arrangements of predictors, eliminating irrelevant predictors (p-value > 0.05). The models used included multiple simple logistic regression models, a logistic regression model with a multi-layered neural network (NN), and a Random Forest model. We found that the simple logistic regression model worked best. Among the logistic regression models fitted to the NACC dataset, the combination of four of the six cognitive tests we studied produced the best result, with 85% accuracy: the Animals Test, the Trail Making Test Part B, the Logical Memory Test, and the Mini-Mental State Examination (MMSE). This research offers primary care physicians a prompt and efficient method for the early diagnosis of AD in the elderly, enabling timely initiation of symptom management.
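The workflow in the abstract (binary recoding, an 80/20 split, a logistic regression fit, and accuracy on the held-out portion) can be illustrated with the sketch below. It rests on several assumptions: the data are synthetic stand-ins rather than NACC records, a single hypothetical test score replaces the study's multiple cognitive tests, and the model is fitted with plain gradient descent in pure Python instead of a statistics package. It is not the study's model.

```python
import math
import random


def to_binary(diagnosis):
    """Recode 0 (healthy) to 0, and 1 (MCI) or 2 (dementia due to AD) to 1."""
    return 0 if diagnosis == 0 else 1


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.5, steps=500):
    """Fit P(y=1 | x) = sigmoid(w * x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


# Synthetic data: one made-up score per subject, lower for impaired subjects.
rng = random.Random(0)
centers = {0: 28.0, 1: 18.0, 2: 10.0}  # hypothetical score centers per diagnosis
rows = []
for i in range(100):
    diagnosis = i % 3
    score = centers[diagnosis] + rng.uniform(-1.0, 1.0)
    rows.append((score, to_binary(diagnosis)))

# 80/20 split into training and testing rows.
rng.shuffle(rows)
split = int(0.8 * len(rows))
train_rows, test_rows = rows[:split], rows[split:]

# Standardize the score using training statistics only.
mu = sum(s for s, _ in train_rows) / len(train_rows)
sd = math.sqrt(sum((s - mu) ** 2 for s, _ in train_rows) / len(train_rows))


def standardize(score):
    return (score - mu) / sd


w, b = fit_logistic(
    [standardize(s) for s, _ in train_rows],
    [y for _, y in train_rows],
)

# Predict "impaired" (1) when the fitted probability is at least 0.5.
predictions = [
    1 if sigmoid(w * standardize(s) + b) >= 0.5 else 0 for s, _ in test_rows
]
accuracy = sum(p == y for p, (_, y) in zip(predictions, test_rows)) / len(test_rows)
print(f"held-out accuracy: {accuracy:.2f}")
```

The synthetic groups are deliberately well separated, so the accuracy printed here says nothing about real cognitive test data. The point is only the order of the steps: recode, split, fit on the training rows, then score on rows the model has not seen.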

Project Research time: 2022-2023

Project Title: Revolutionizing Bone Fracture Detection: YOLOv5 vs. YOLOv8 Face-off

Submitted research paper for publication in The National High School Journal of Science

The research study “Revolutionizing Bone Fracture Detection: YOLOv5 vs. YOLOv8 Face-off” assessed the performance of two state-of-the-art YOLO (You Only Look Once) object detection algorithms, YOLOv5 and YOLOv8, across small (s), medium (m), and large (l) model variants. These six YOLO models underwent training, validation, and testing on three distinct datasets: one consisted exclusively of wrist fractures, while the other two contained a mix of bone fracture types. Model performance was evaluated using precision, F1 score, and recall confidence ratings, and the results were presented through tables, images, and graphs. Ultimately, the study established that YOLOv5 and YOLOv8 demonstrate comparable detection capabilities, with YOLOv5 occasionally exhibiting superior accuracy in detecting fractures of varying sizes.
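The precision, recall, and F1 metrics used in the YOLO comparison above depend on the confidence threshold at which detections are kept. The sketch below shows that relationship. It is a simplified illustration, not the evaluation code from the study: it assumes each detection has already been marked as a correct or incorrect match to a labeled fracture, and the function name `metrics_at_threshold` and all sample values are hypothetical.

```python
def metrics_at_threshold(detections, num_ground_truth, threshold):
    """Compute (precision, recall, F1) for detections kept at a threshold.

    detections: list of (confidence, is_correct) pairs, where is_correct
    says whether the detection matched a labeled fracture.
    num_ground_truth: total number of labeled fractures in the images.
    """
    kept = [is_correct for confidence, is_correct in detections
            if confidence >= threshold]
    tp = sum(kept)                 # correct detections that were kept
    fp = len(kept) - tp            # incorrect detections that were kept
    fn = num_ground_truth - tp     # labeled fractures that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Made-up detections for images containing 5 labeled fractures.
sample = [
    (0.95, True), (0.90, True), (0.80, False),
    (0.60, True), (0.40, False), (0.30, True),
]
for threshold in (0.25, 0.5, 0.85):
    precision, recall, f1 = metrics_at_threshold(sample, 5, threshold)
    print(f"threshold={threshold}: P={precision:.2f} R={recall:.2f} F1={f1:.2f}")
```

Sweeping the threshold from 0 to 1 and plotting each metric against it gives the kind of confidence curves commonly reported for object detectors.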

Project Research time: 2021-2022

Project Title: Early Skin Cancer Detection of Basal Cell Carcinoma, Squamous Cell Carcinoma, and Malignant Melanoma through Artificial Intelligence

Project website link: Visit the completed project slides and lab logs.

Project Research time: 2020-2021

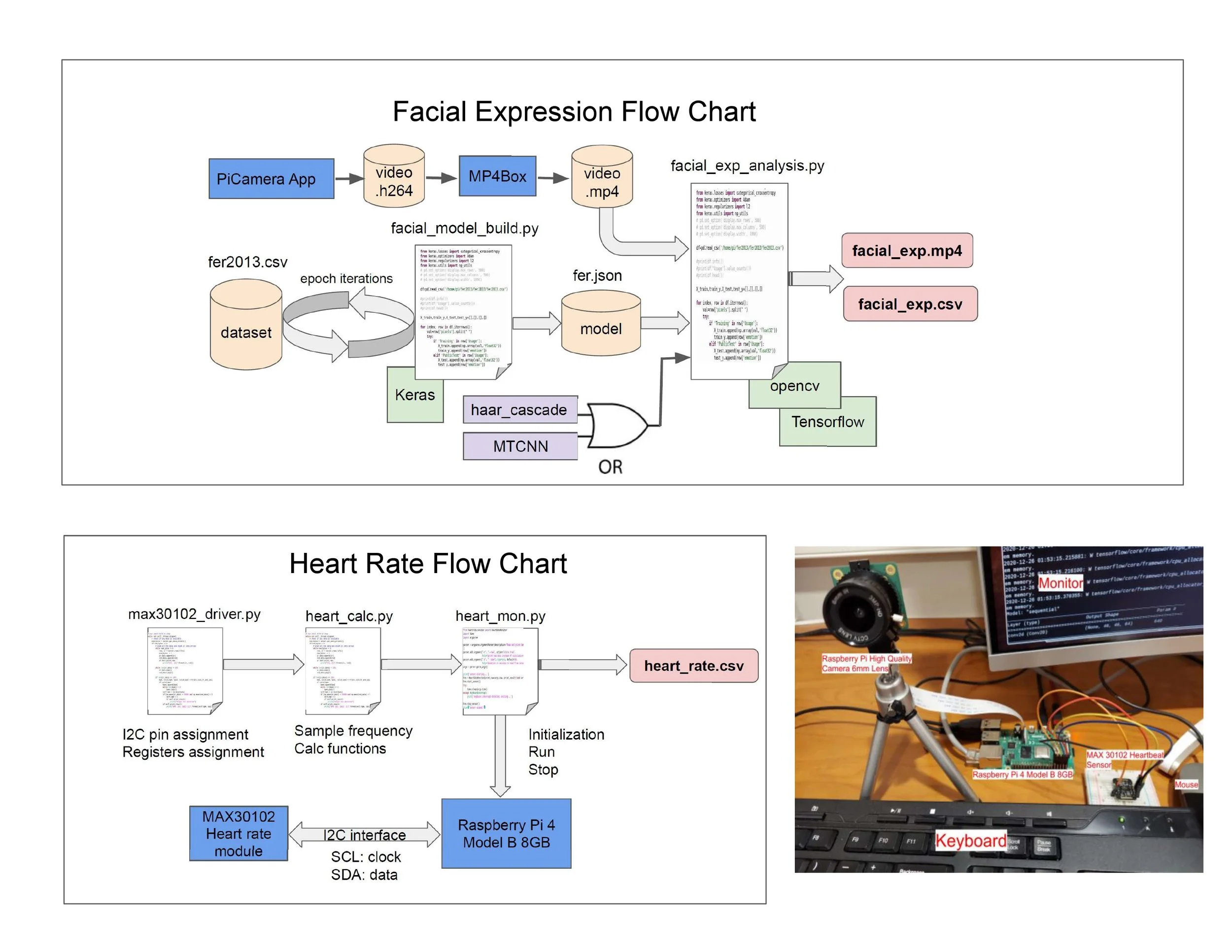

Project Title: Computer Vision with Biometric Information for Engagement Detection in Virtual Learning

Project website link: Visit the completed project slides and lab logs